The capabilities of Azure Virtual WAN

A critical part of any cloud platform is how you manage networking, at least if you have other clouds and/or on-premises environments, and their IP ranges, to take into account. Central to this are the network topology and IPAM (IP Address Management).

Luckily, there is now an IPAM feature in Azure, specifically in the Azure Virtual Network Manager service. That is not what we will cover today, but it could be a future post and video if that sounds interesting.

I recently started looking into Azure Virtual WAN because I needed to establish a new greenfield platform in Azure where networking would be an important part. I have always set up networking quite "manually", if you can call it that: using a third-party system for IPAM and configuring my hub-and-spoke topology from that information. That also meant one repository with code for the central networking/connectivity parts and other repositories for the spokes, where I would use VNET peering.

There is nothing wrong with that, but I have always wanted to try Azure Virtual WAN (VWAN). The service is generally available, even though some features may still be in preview or behind preview flags.

In this post I will share the findings from my research, share some code I wrote to configure parts of it with Terraform and the AzAPI provider, and finally list my sources under References.

My findings

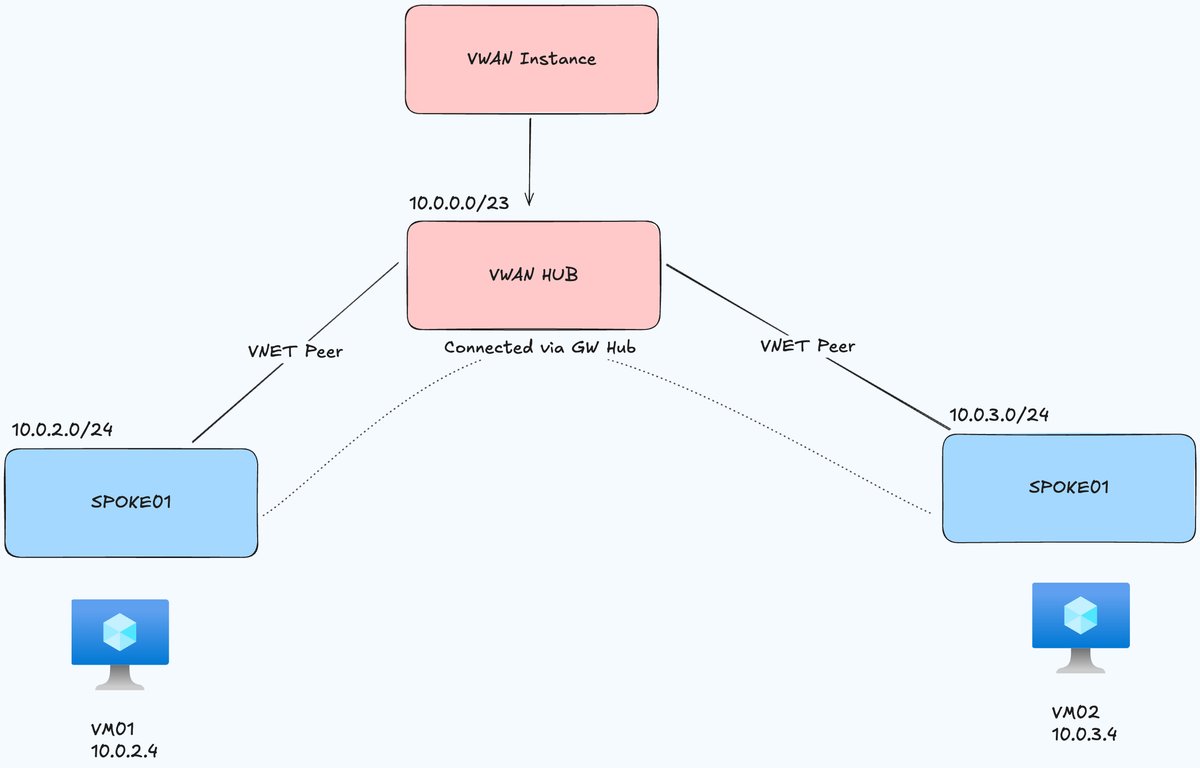

At a high level, these are the items you work with in Azure Virtual WAN:

- The instance itself: Typically you should only ever have one, no matter how many regions you operate in.

- Hubs: A hub is a managed Azure virtual network which can act as a route server for transitive VNET-to-VNET communication and host a VPN gateway, an Azure Firewall (if it is a Secure Hub) or a third-party NVA. One per region is a safe bet.

- Spokes: A spoke can be an Azure VNET, an S2S VPN device, an SD-WAN device, a P2S user, etc. Basically "anything else" that is connected to the Azure Virtual WAN hubs.

There are two SKUs for Azure Virtual WAN: Basic and Standard.

Basic only supports S2S VPN connections, and there is no transit between the virtual networks that you connect to your hub, at least not by default.

Standard has all the Basic features plus transitive VNET connections that work out of the box, so two VNETs that you connect to the hub can communicate (you can still isolate VNETs if you want to). In addition, you get all the other cool features: support for P2S, ExpressRoute with Premium Global Reach and, if you have more than one hub, hubs that connect to each other and give you a full mesh between any and all devices connected to them.

Full mesh means that any device can talk to any other device in the network.

Hubs share a lot, as you can see. However, if you are running a Secure Hub, meaning a hub network with an Azure Firewall, each hub needs its own firewall, since hubs cannot share one between them. The recommendation seems to be to give each hub at least a /23 address space so it can support whatever network appliances you may or may not deploy inside it.
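To make the "one hub per region, at least a /23 each" guidance a bit more concrete, here is a minimal sketch of how several hubs could be given non-overlapping /23 prefixes. It is not part of my lab: the supernet `10.100.0.0/16`, the region list and the resource name `vwan_hubs` are assumptions made up for illustration, and it reuses the resource group, Virtual WAN instance and `var.environment` defined in the lab setup later in this post.

```hcl
locals {
  # Hypothetical supernet reserved for hubs and a hypothetical list of regions.
  hub_supernet = "10.100.0.0/16"
  hub_regions  = ["swedencentral", "westeurope"]

  # cidrsubnet(prefix, 7, i) carves the i-th /23 out of the /16:
  # index 0 -> 10.100.0.0/23, index 1 -> 10.100.2.0/23
  hub_prefixes = {
    for i, region in local.hub_regions : region => cidrsubnet(local.hub_supernet, 7, i)
  }
}

# One hub per region, all attached to the same Virtual WAN instance.
resource "azapi_resource" "vwan_hubs" {
  for_each = local.hub_prefixes

  type      = "Microsoft.Network/virtualHubs@2024-05-01"
  name      = "vhub-${var.environment}-${each.key}-vwan"
  parent_id = azapi_resource.rg.id
  location  = each.key

  body = {
    properties = {
      addressPrefix = each.value
      virtualWan = {
        id = azapi_resource.vwan_instance.id
      }
    }
  }
}
```

Deriving the prefixes with `cidrsubnet` keeps the hub ranges from overlapping as regions are added, but the supernet itself still has to be reserved in whatever IPAM you use.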

Lab setup

I wanted to give the service a go, creating all the items with Terraform. Lately I have also been trying to use the AzAPI provider more before just defaulting to AzureRM, so let's see how that works out.

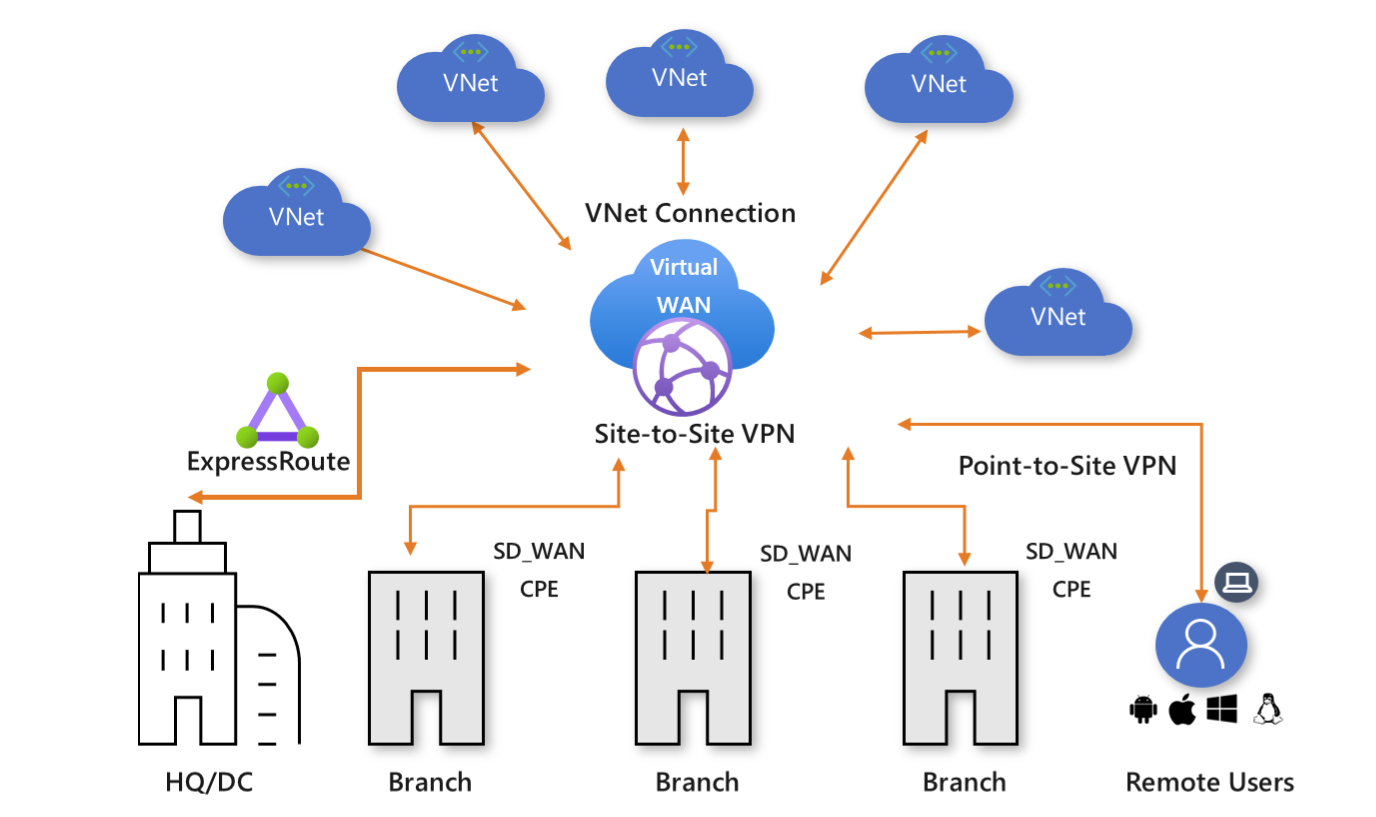

What we want to build is this:

- 1x Virtual WAN instance

- 1x VWAN Hub

- 2x Virtual Networks (Spokes) with subnets

- 2x VNET Peers between Hub and Spokes

- 2x VMs to test connectivity

First I create a main.tf and add the terraform block, the provider, the resource group and the VWAN resources:

```hcl
terraform {
  required_providers {
    azapi = {
      source  = "Azure/azapi"
      version = "2.4.0"
    }
  }
}

provider "azapi" {
  enable_preflight = true
}

resource "azapi_resource" "rg" {
  type     = "Microsoft.Resources/resourceGroups@2025-04-01"
  name     = "rg-${var.environment}-${var.location_short}-vwan"
  location = var.location
}

resource "azapi_resource" "vwan_instance" {
  type      = "Microsoft.Network/virtualWans@2024-05-01"
  name      = "vwan-${var.environment}-${var.location_short}-vwan"
  parent_id = azapi_resource.rg.id
  location  = var.location

  body = {
    properties = {
      allowBranchToBranchTraffic = true
      disableVpnEncryption       = false
      allowVnetToVnetTraffic     = true
      # Standard SKU: transitive VNET-to-VNET connectivity out of the box
      type = "Standard"
    }
  }
}

resource "azapi_resource" "vwan_hub" {
  type      = "Microsoft.Network/virtualHubs@2024-05-01"
  name      = "vhub-${var.environment}-${var.location_short}-vwan"
  parent_id = azapi_resource.rg.id
  location  = var.location

  body = {
    properties = {
      # /23, following the sizing recommendation mentioned earlier
      addressPrefix        = "10.0.0.0/23"
      hubRoutingPreference = "VpnGateway"
      virtualRouterAutoScaleConfiguration = {
        minCapacity = 2
      }
      virtualWan = {
        id = azapi_resource.vwan_instance.id
      }
    }
  }
}
```

That is all the VWAN stuff. In the same file, let's add the spoke VNETs, their subnets and the connections to the hub:

```hcl
resource "azapi_resource" "spoke-vnet" {
  type      = "Microsoft.Network/virtualNetworks@2024-05-01"
  name      = "vnet-${var.environment}-${var.location_short}-vwan"
  parent_id = azapi_resource.rg.id
  location  = var.location

  body = {
    properties = {
      addressSpace = {
        addressPrefixes = ["10.0.2.0/24"]
      }
    }
  }
}

resource "azapi_resource" "subnet" {
  type      = "Microsoft.Network/virtualNetworks/subnets@2024-05-01"
  name      = "sn-vwan-workload"
  parent_id = azapi_resource.spoke-vnet.id

  body = {
    properties = {
      addressPrefix = "10.0.2.0/24"
    }
  }
}

resource "azapi_resource" "spoke-vnet2" {
  type      = "Microsoft.Network/virtualNetworks@2024-05-01"
  name      = "vnet-${var.environment}-${var.location_short}-vwan2"
  parent_id = azapi_resource.rg.id
  location  = var.location

  body = {
    properties = {
      addressSpace = {
        addressPrefixes = ["10.0.3.0/24"]
      }
    }
  }
}

resource "azapi_resource" "subnet2" {
  type      = "Microsoft.Network/virtualNetworks/subnets@2024-05-01"
  name      = "sn-vwan-workload"
  parent_id = azapi_resource.spoke-vnet2.id

  body = {
    properties = {
      addressPrefix = "10.0.3.0/24"
    }
  }
}

# Hub-to-spoke connection for the first spoke
resource "azapi_resource" "vhub-spoke-connectivity" {
  type      = "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections@2024-05-01"
  parent_id = azapi_resource.vwan_hub.id
  name      = "vnet-connection-${azapi_resource.vwan_hub.name}-to-${azapi_resource.spoke-vnet.name}"

  body = {
    properties = {
      enableInternetSecurity = false
      remoteVirtualNetwork = {
        id = azapi_resource.spoke-vnet.id
      }
    }
  }

  schema_validation_enabled = false
}

# Hub-to-spoke connection for the second spoke
resource "azapi_resource" "vhub-spoke-connectivity2" {
  type      = "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections@2024-05-01"
  parent_id = azapi_resource.vwan_hub.id
  name      = "vnet-connection-${azapi_resource.vwan_hub.name}-to-${azapi_resource.spoke-vnet2.name}"

  body = {
    properties = {
      enableInternetSecurity = false
      remoteVirtualNetwork = {
        id = azapi_resource.spoke-vnet2.id
      }
    }
  }

  schema_validation_enabled = false
}
```

The really nice part about this is that a single connection block per spoke is enough for the hub and the spoke to fully connect and synchronize. With classic VNET peering you would declare a peering in each direction.
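Since the two connection blocks only differ in which spoke they point at, they could also be collapsed into one resource with `for_each`. The following is a minimal sketch of that idea, not what I deployed: the local `spoke_vnet_ids`, its keys and the resource name `vhub_spoke_connections` are hypothetical, and it is meant as a replacement for the two connection blocks rather than an addition to them.

```hcl
locals {
  # Keys are arbitrary labels (hypothetical); values are the IDs of the
  # spoke VNETs created in main.tf.
  spoke_vnet_ids = {
    spoke1 = azapi_resource.spoke-vnet.id
    spoke2 = azapi_resource.spoke-vnet2.id
  }
}

# One hub connection per entry in the map.
resource "azapi_resource" "vhub_spoke_connections" {
  for_each = local.spoke_vnet_ids

  type      = "Microsoft.Network/virtualHubs/hubVirtualNetworkConnections@2024-05-01"
  parent_id = azapi_resource.vwan_hub.id
  name      = "vnet-connection-${azapi_resource.vwan_hub.name}-to-${each.key}"

  body = {
    properties = {
      enableInternetSecurity = false
      remoteVirtualNetwork = {
        id = each.value
      }
    }
  }

  schema_validation_enabled = false
}
```

Adding a third spoke then becomes one extra line in the map. Note that the connection names differ from the ones in my lab, so switching an existing deployment over would recreate the connections.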

We have now added everything except the two virtual machines that we want to test connectivity between. To keep things readable, I add these to a vm1.tf and a vm2.tf file:

```hcl
locals {
  os_disk_name            = "myosdisk1"
  data_disk_name          = "mydatadisk1"
  attached_data_disk_name = "myattacheddatadisk1"
}

# NIC for VM1, placed in the first spoke's subnet
resource "azapi_resource" "networkInterface" {
  type      = "Microsoft.Network/networkInterfaces@2022-07-01"
  parent_id = azapi_resource.rg.id
  name      = "nic-vm1"
  location  = var.location

  body = {
    properties = {
      enableAcceleratedNetworking = false
      enableIPForwarding          = false
      ipConfigurations = [
        {
          name = "testconfiguration1"
          properties = {
            primary                   = true
            privateIPAddressVersion   = "IPv4"
            privateIPAllocationMethod = "Dynamic"
            subnet = {
              id = azapi_resource.subnet.id
            }
          }
        },
      ]
    }
  }

  schema_validation_enabled = false
  response_export_values    = ["*"]
}

resource "azapi_resource" "virtualMachine" {
  type      = "Microsoft.Compute/virtualMachines@2023-03-01"
  parent_id = azapi_resource.rg.id
  name      = "vm1"
  location  = var.location

  body = {
    properties = {
      hardwareProfile = {
        vmSize = "Standard_F2"
      }
      networkProfile = {
        networkInterfaces = [
          {
            id = azapi_resource.networkInterface.id
            properties = {
              primary = false
            }
          },
        ]
      }
      osProfile = {
        adminPassword = var.vm_password
        adminUsername = "localadmin"
        computerName  = "hostname230630032848831819"
        linuxConfiguration = {
          disablePasswordAuthentication = false
        }
      }
      storageProfile = {
        imageReference = {
          offer     = "UbuntuServer"
          publisher = "Canonical"
          sku       = "16.04-LTS"
          version   = "latest"
        }
        osDisk = {
          caching                 = "ReadWrite"
          createOption            = "FromImage"
          name                    = local.os_disk_name
          writeAcceleratorEnabled = false
        }
      }
    }
  }

  schema_validation_enabled = false
  response_export_values    = ["*"]
}
```

Not my best Terraform here, but I just wanted two quick VMs to test with...

```hcl
locals {
  os_disk_name2            = "myosdisk2"
  data_disk_name2          = "mydatadisk2"
  attached_data_disk_name2 = "myattacheddatadisk1"
}

# NIC for VM2, placed in the second spoke's subnet
resource "azapi_resource" "networkInterface2" {
  type      = "Microsoft.Network/networkInterfaces@2022-07-01"
  parent_id = azapi_resource.rg.id
  name      = "nic-vm2"
  location  = var.location

  body = {
    properties = {
      enableAcceleratedNetworking = false
      enableIPForwarding          = false
      ipConfigurations = [
        {
          name = "testconfiguration1"
          properties = {
            primary                   = true
            privateIPAddressVersion   = "IPv4"
            privateIPAllocationMethod = "Dynamic"
            subnet = {
              id = azapi_resource.subnet2.id
            }
          }
        },
      ]
    }
  }

  schema_validation_enabled = false
  response_export_values    = ["*"]
}

resource "azapi_resource" "virtualMachine2" {
  type      = "Microsoft.Compute/virtualMachines@2023-03-01"
  parent_id = azapi_resource.rg.id
  name      = "vm2"
  location  = var.location

  body = {
    properties = {
      hardwareProfile = {
        vmSize = "Standard_F2"
      }
      networkProfile = {
        networkInterfaces = [
          {
            id = azapi_resource.networkInterface2.id
            properties = {
              primary = false
            }
          },
        ]
      }
      osProfile = {
        adminPassword = var.vm_password
        adminUsername = "localadmin"
        computerName  = "hostname230630032848831819"
        linuxConfiguration = {
          disablePasswordAuthentication = false
        }
      }
      storageProfile = {
        imageReference = {
          offer     = "UbuntuServer"
          publisher = "Canonical"
          sku       = "16.04-LTS"
          version   = "latest"
        }
        osDisk = {
          caching                 = "ReadWrite"
          createOption            = "FromImage"
          name                    = local.os_disk_name2
          writeAcceleratorEnabled = false
        }
      }
    }
  }

  schema_validation_enabled = false
  response_export_values    = ["*"]
}
```

Again, not my best Terraform here, but I just wanted two quick VMs to test with...

Finally, here is my variables.tf:

```hcl
variable "environment" {
  description = "The environment for which the resources are being created (e.g., dev, staging, prod)."
  type        = string
}

variable "location" {
  description = "The Azure region where the resources will be deployed."
  type        = string
}

variable "location_short" {
  description = "Short name for the Azure region, used in resource names."
  type        = string
}

variable "vm_password" {
  description = "Admin password for the two test VMs."
  type        = string
  sensitive   = true
}
```
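None of the variables have defaults, so they need values at plan time. A minimal sketch of a `terraform.tfvars` could look like this. The values are hypothetical examples and not necessarily the ones I used:

```hcl
# terraform.tfvars (hypothetical example values)
environment    = "dev"
location       = "swedencentral"
location_short = "sc"

# vm_password is intentionally left out so the secret stays out of the repo.
# Terraform reads it from the TF_VAR_vm_password environment variable
# or prompts for it interactively.
```

With these values the resource group would be named `rg-dev-sc-vwan`, following the naming pattern in main.tf.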

For the test

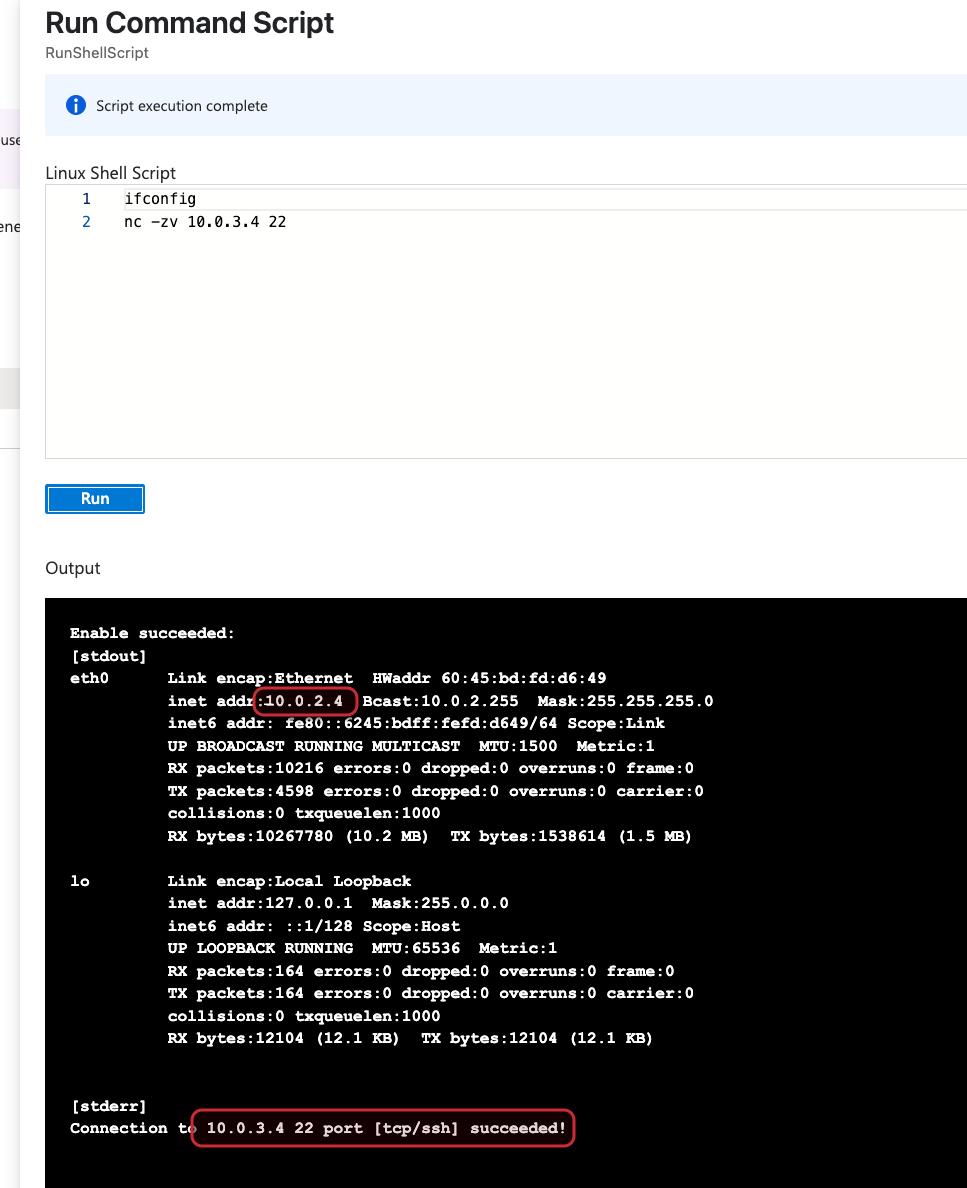

Now that we have everything we need, I will head to one of the virtual machines in Azure and use the Run Command feature. I will run netcat from VM1 to VM2 to check if TCP/22 is reachable.
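To run that check you need the private IP of VM2. Because the NICs are created with `response_export_values = ["*"]`, the address can be surfaced as a Terraform output. This is a small sketch that assumes AzAPI 2.x, where `output` is exposed as an object. The output name `vm2_private_ip` is made up for illustration.

```hcl
# Expose the private IP that Azure assigned to VM2's NIC.
output "vm2_private_ip" {
  description = "Private IP of VM2, used as the netcat target from VM1."
  value       = azapi_resource.networkInterface2.output.properties.ipConfigurations[0].properties.privateIPAddress
}
```

After `terraform apply`, the value can be read with `terraform output vm2_private_ip` and used in the Run Command script, for example something along the lines of `nc -zv <vm2-ip> 22` (the exact netcat flags here are my assumption).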

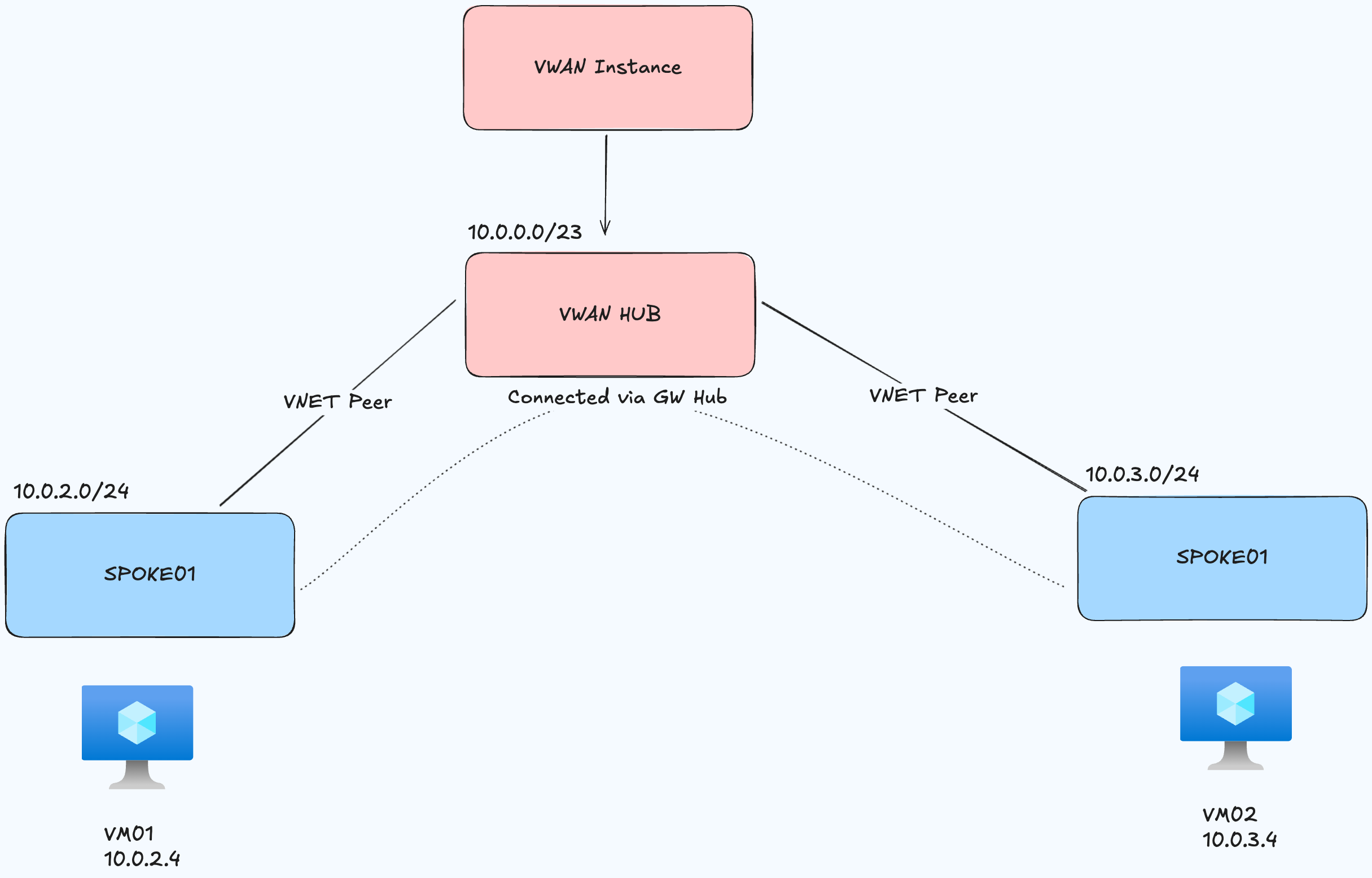

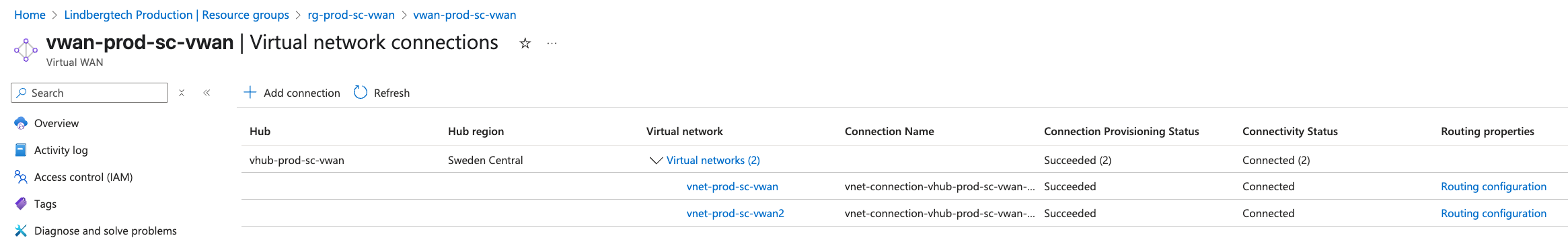

First, here is my HUB with its two peered spokes (virtual networks):

Here we can see a successful connection from VM1 to VM2:

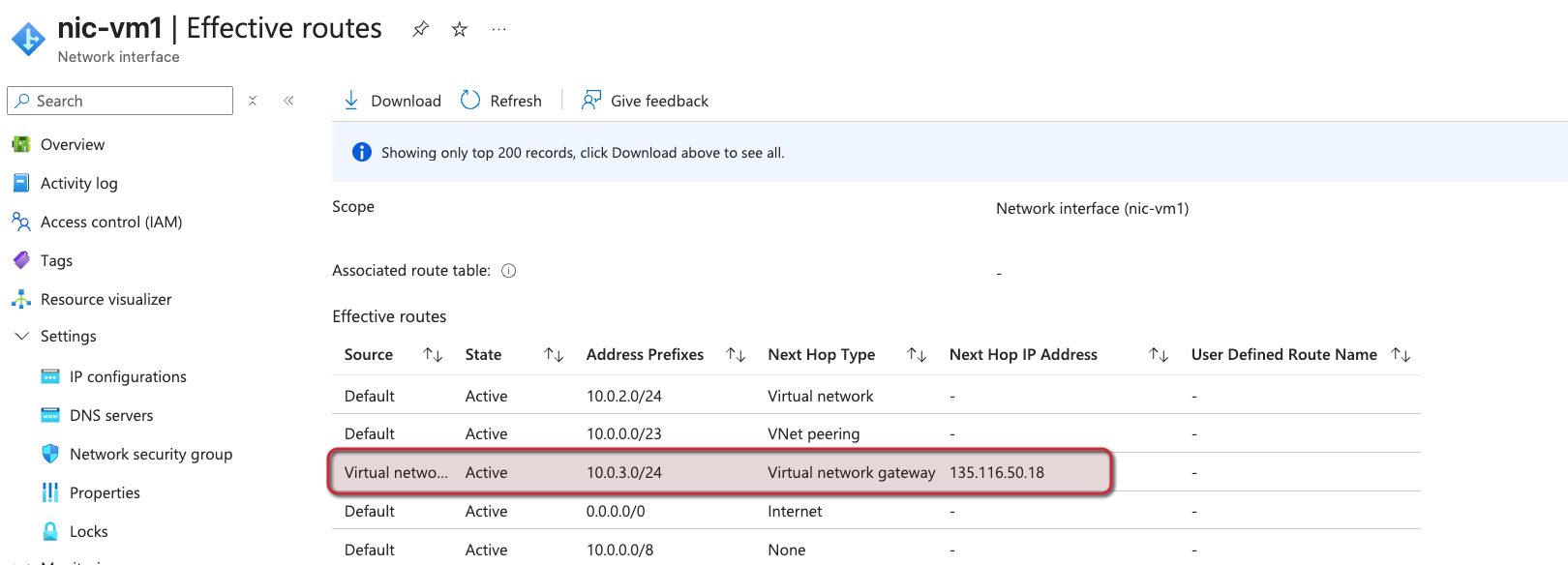

If we go to the effective routes of VM1's NIC, we can see a route to the other peer network:
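The same information can probably be pulled with AzAPI instead of the portal. The sketch below is an assumption on my part and not something I ran in this lab: it uses `azapi_resource_action` to call the `effectiveRouteTable` action on VM1's NIC, and the names `vm1_effective_routes` are hypothetical. The VM has to be running for Azure to return effective routes.

```hcl
# Sketch: ask Azure for the effective routes on VM1's NIC.
resource "azapi_resource_action" "vm1_effective_routes" {
  type        = "Microsoft.Network/networkInterfaces@2022-07-01"
  resource_id = azapi_resource.networkInterface.id
  action      = "effectiveRouteTable"
  method      = "POST"

  response_export_values = ["*"]

  # Wait until the VM exists and both spokes are connected to the hub,
  # otherwise the route to the other spoke will not be there yet.
  depends_on = [
    azapi_resource.virtualMachine,
    azapi_resource.vhub-spoke-connectivity,
    azapi_resource.vhub-spoke-connectivity2,
  ]
}

output "vm1_effective_routes" {
  value = azapi_resource_action.vm1_effective_routes.output
}
```

In the result you would look for an entry covering the second spoke's prefix, `10.0.3.0/24`.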

Conclusion

As mentioned, I have been researching the Azure Virtual WAN service and wanted to share my findings, even though we are barely scratching the surface in this post. I have also been practicing using AzAPI instead of AzureRM and wanted to share that work as well. It is not super difficult; it is mostly a matter of not defaulting to AzureRM every time.

If you want to have a look at the code from this post you can find it here: https://github.com/carlzxc71/azure-terraform-vwan

References
